☕ #13: The Semantic Layer Renaissance, The Death of the “Shopping List” Architecture, and The Big/Small Metadata Question

A recipe for an AI-ready semantic layer, the new dynamic of the data stack's tools and categories, and a counter-thesis to distributed metadata.

Hello data friends,

We meet again after a long(-ish) break, mainly due to a busy few months on both the personal and professional sides. As you might’ve noticed if we’re connected on LinkedIn, I left my role at Sifflet and joined Neo4j to work on graph analytics (something I’ve always been passionate about!) - this won’t impact the content of the newsletter: I’ll keep discussing the overall data and analytics space and how to build data products & platforms at scale. (But maybe we’ll have a graph-related “twist” here and there, since I’m getting more familiar with graph analytics use cases.)

Now let’s briefly go through what we’ll cover in this month’s edition: we’ll first discuss the semantic layer renaissance (really, it’s back in style!) and why we need a new approach (other than defining aggregations and joins in YAML) for an AI-ready semantic layer, then we’ll go through how the data stack is shifting into a “hub and spike” model instead of a “shopping list” architecture, and finally we’ll end with a note on DuckDB’s new release: DuckLake.

So, without further ado, let’s talk data & analytics while the espresso is still hot.

The Semantic Layer Renaissance (and why it’s actually different this time)

Back in 2021/2022, during the peak of the Modern Data Stack craze (R.I.P.), the semantic layer felt a bit like… “unnecessary” homework.

In theory it was an essential component, sure, and I was a strong believer in its value - but it ultimately consisted of thousands of YAML lines just to define how customers joins with orders. The promise was “metric consistency,” but deep down, many of us knew it was overkill: The use cases weren’t there yet, and all the semantic layer was doing was adding friction between a BI tool (where analytics lived at the time) and a data warehouse.

The friction was unnecessary because human analysts are smart - they have implicit context. They know not to count test accounts in churn figures. They know who to ask if a number looks weird. Building a massive configuration layer just to save them a JOIN often didn’t feel like high ROI.

But the landscape has shifted.

We are moving from a world of Analytics (humans looking at charts) to Agents (AI taking actions). And unlike human data analysts, an LLM is context-blind: it doesn’t know your business nuance. So if you feed it raw SQL snippets or simple metric definitions, it will hallucinate.

This is where the need for a “new” semantic layer comes in: it’s no longer about optimizing for SQL generation; it’s about optimizing for reasoning. It shifts the job from defining the Math (”Here is the formula for Churn”) to defining the Meaning (”Here is why we track Churn, who owns it, and what business goal it impacts”).

A couple of weeks ago, I wrote a deep dive on Medium about this shift. If you are building for AI, or just tired of writing YAML for dashboards that nobody uses, give it a read: Building a Semantic Layer for the AI Era: Beyond SQL Generation.

The Death of the “Shopping List” Architecture: Why You Just Need a Platform (and a Few Exceptions)

For years, we treated data architecture diagrams as a shopping list - the standard advice was to buy (or build, for the brave souls) a specialized “Best-of-Breed” tool for every single area of the stack: A data catalog, an orchestrator, a data observability tool, etc. But in 2025, with data platforms like Snowflake and Databricks rebundling the stack (via features that cover everything from orchestration to metadata management), the “baseline” requirements for governance, observability, and execution are now built-in - especially given how AI is minimizing the surface area of human involvement in data work. And so if you’re just starting out, buying a dedicated catalog before there’s a concrete need for it is definitely not the best place to invest money or resources.

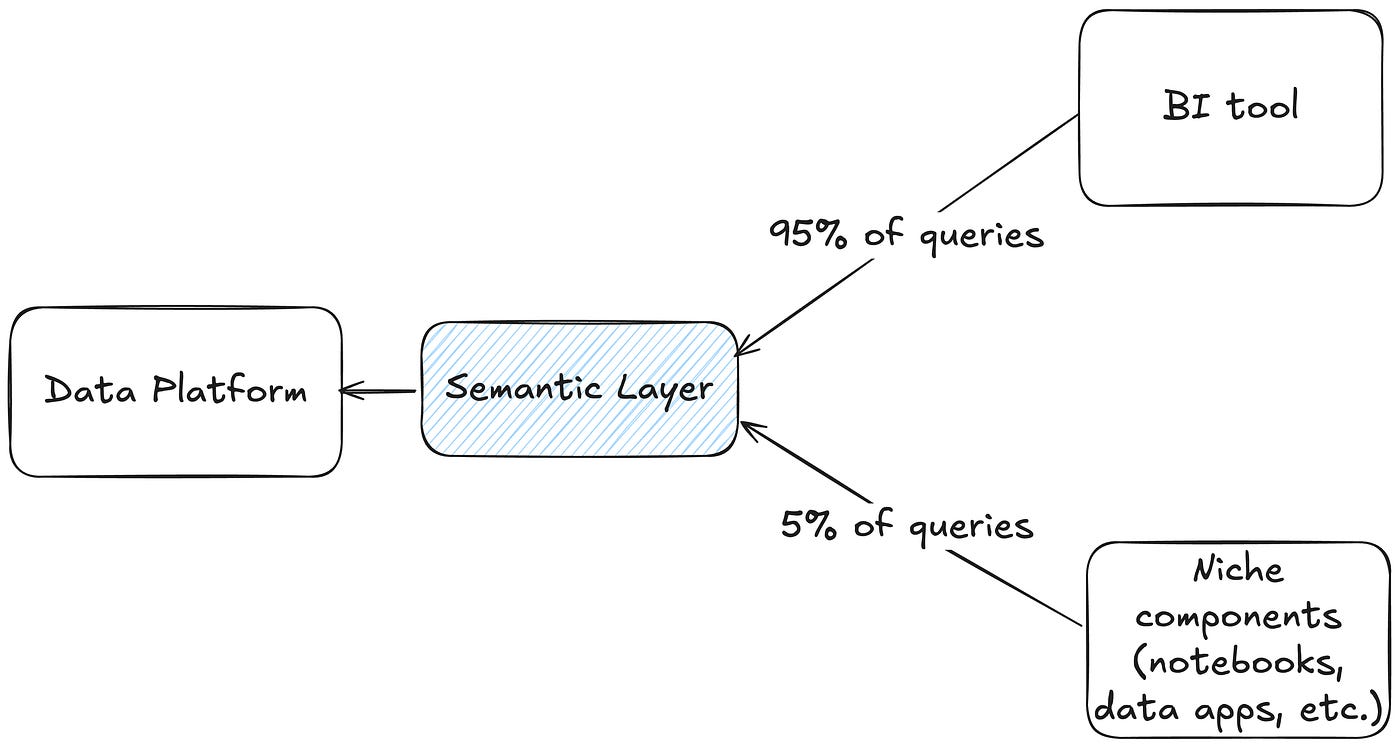

Instead, we are moving toward a “Hub and Spike” architecture. The “Hub” is your massive, all-encompassing platform that handles the boring, day-to-day utility work. The “Spikes” are the specialized tools you buy only when you hit a specific, wall-sized problem that the platform can’t solve - like orchestrating a job across an on-prem legacy component and Salesforce, or governing 20 years of legacy Oracle data.

This marks a subtle but critical shift: Yesterday, we bought tools to build a Stack. Today, we buy tools to fix a Spike. You no longer buy a tool just to “fill a category” (e.g., “we need a catalog”); you buy it to solve a bounded, painful edge case that your central hub can no longer contain. In this context, my recommendation (for most cases) is the following: start with the platform, and earn the right to buy the specialist.

The Duck Is Out of the Lake: What if Metadata Doesn’t Need to Be Distributed?

DuckDB disrupted the data industry a few years ago with a simple yet brilliant observation: most “Big Data” isn’t actually big. They proved that for 99% of use cases, data fits on a single machine, so using a distributed cluster makes no sense (to an extent). Now, with the introduction of DuckLake, they are applying that exact same philosophy to the Data Lakehouse architecture.

While the new wave of distributed table formats (hi Apache Iceberg 👋) treat metadata as “Big Data” (scattering it across thousands of distributed files in object storage), DuckLake asks the obvious question: Why distribute the metadata if it doesn’t need to be distributed? Their approach keeps the data distributed (Parquet in S3) but centralizes the metadata in a standard, ACID-compliant database. It recognizes that while your row count might be massive, your catalog operations are almost always “small data.”

This adds a much-needed nuance to the stack, challenging the “distributed everything” default that dominated the Iceberg/Delta/Hudi conversation. It’s a healthy correction towards simplicity that aligns better with how most teams actually operate. If you want to dive deeper into why centralized metadata might be the future (again), MotherDuck’s CEO Hannes Mühleisen gave a fantastic talk breaking it down.

Hope you enjoyed this edition of Data Espresso! If you found it useful, feel free to share it with fellow data folks in your network.

Feedback is always appreciated as usual, so feel free to share your thoughts in the comments or reach out directly – I’d love to hear your take on the edition’s topics!

Until next time, stay safe and caffeinated ☕

Fantastic framing of the analytics-to-agents shift and why semantic layers matter now. The point about LLMs being context-blind hits hard, humans carry implicit knowlege about test accounts and data quirks that agents need explicitly encoded. The Hub-and-Spike model is basically what actually happens in practice anyway, companies just pretend they followed best-of-breed from day one when really they accumulated spikes after hitting walls.